AI For Human Reasoning

Civilization and technology have radically improved the human condition. Nonetheless, the world sometimes goes in directions which essentially nobody would prefer — e.g., nuclear arms races, unexpected financial crashes, predatory marketing, or ubiquitous political misinformation.

This is superficially puzzling: outcomes are determined by people's actions, so why don't we simply avoid the bad outcomes? It's a challenge to our collective competence.

Looking to the future, and at the largest scales, we face a real risk that the world goes in directions that essentially nobody wants. Sometimes this breeds apathy. But often it imparts appetite and energy: if only we were empowered with greater skill and wisdom... what then?

Uplifting society to the point where we reliably avoid these errors feels like a big ask. But AI might do a great deal to unlock better tools that help us on many of these axes. We use the name AI for Human Reasoning for the broad, fuzzy domain of potential AI capabilities that improve the quality of understanding and coordination more than they add to the world's complexity.

This approach prioritizes developing AI that augments human understanding and decision-making rather than replacing it, ensuring that our collective wisdom can outpace the challenges we create.

Contemporary AI makes this vision newly practical:

- Cheap access to large amounts of cognitive labour could unlock useful approaches that were previously unrealistic

- AI systems can be held to more stringent standards of impartiality than any human while still providing complex judgements, which may permit trust in places where it was previously hard to reach

- AI agents can now handle significant software engineering tasks, so the time from concept to functional prototype has shrunk dramatically. This allows for the rapid creation of ambitious tools that empower human reasoning.

The ceiling that technology might eventually permit society to reach here is very high. But we don’t need to wait for superintelligence to get started. Many tools that would already help meaningfully on these dimensions are likely buildable with today’s AI systems.

Examples of tools include:

-

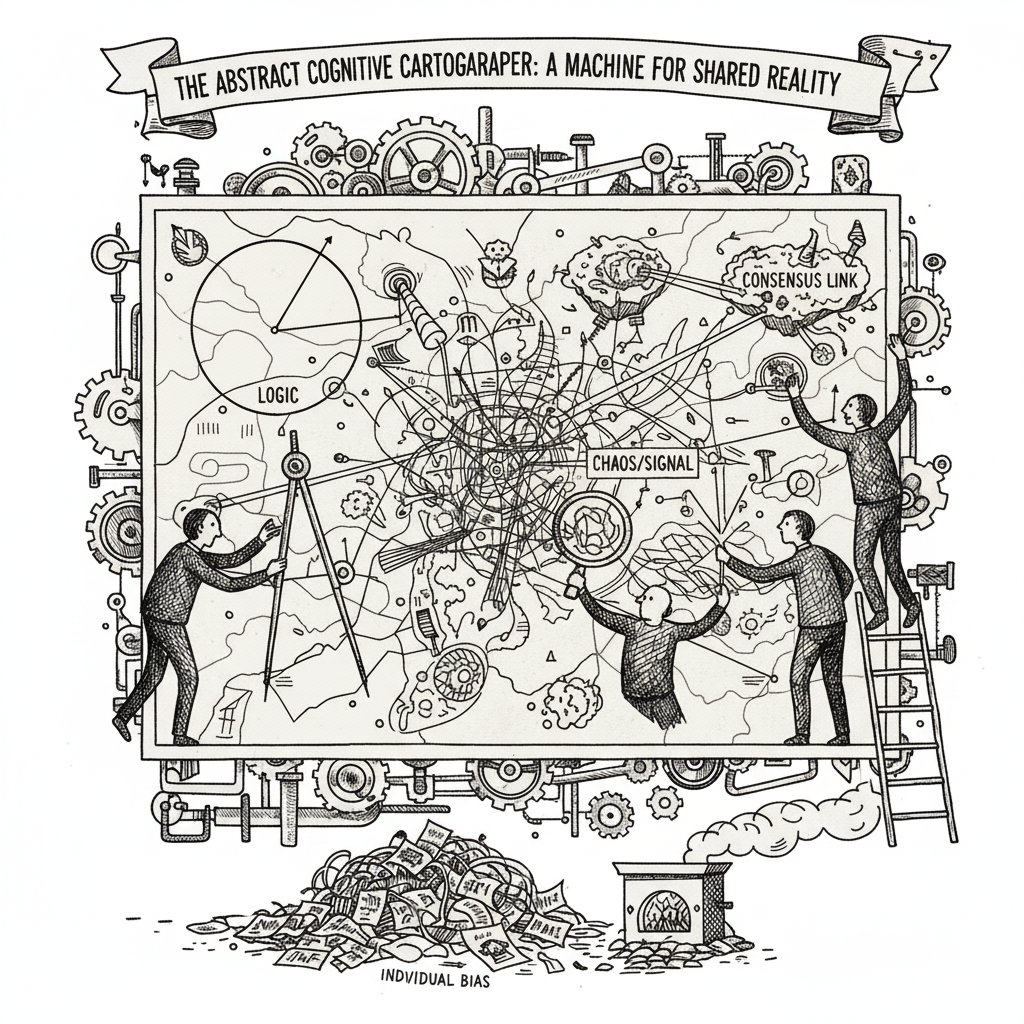

Helping navigate complex information landscapes: To address the growing difficulty of staying informed and support better collective decision-making, we can develop a suite of epistemic tools. These systems would empower users by helping them fact-check claims, interpret public forecasts, and automatically detect manipulative rhetoric. Extending concepts like "Community Notes" to all forms of content would be a core part of this effort.

-

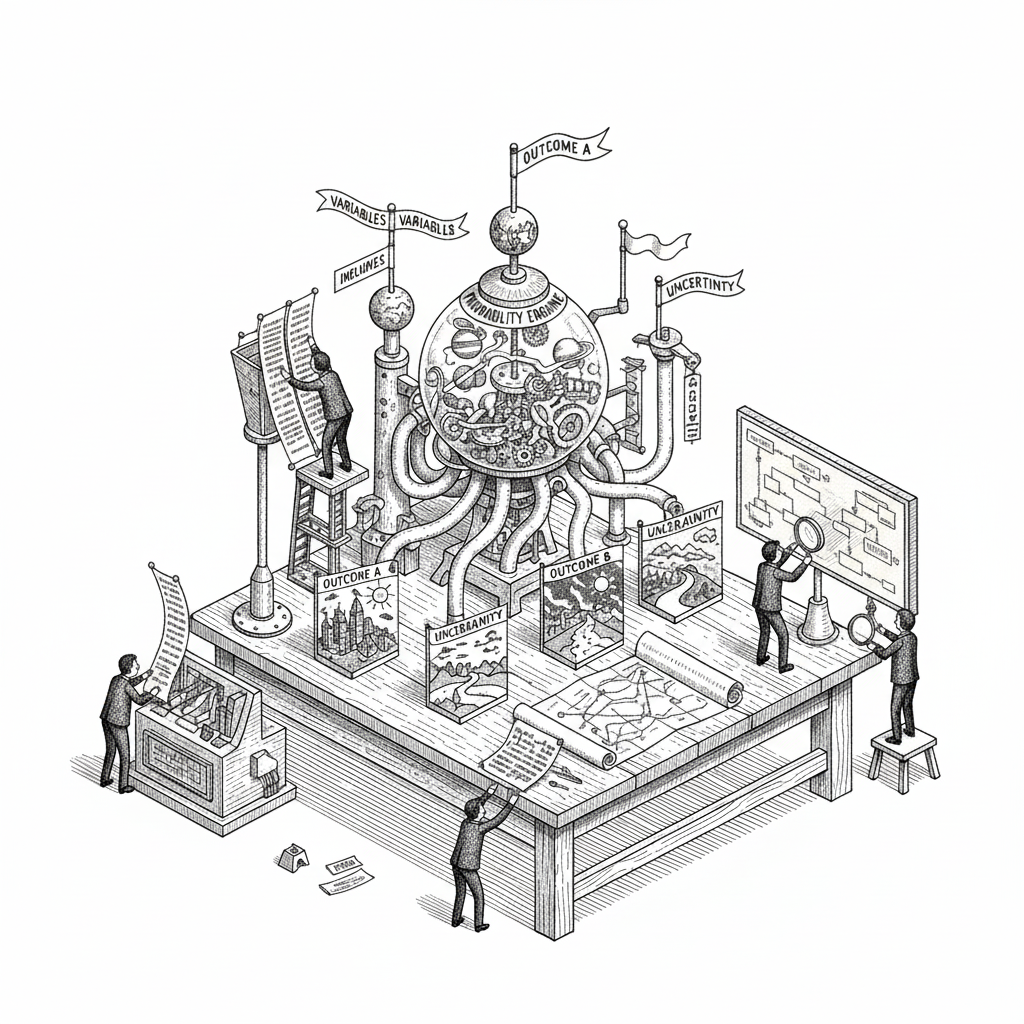

Forecasting and scenario planning: To help humanity navigate the challenges of advanced AI, we can build tools that augment and improve human forecasting. These AIs would assist in key tasks like formulating questions, generating scenarios, and eliciting implicit models from experts. This approach not only makes human forecasting more efficient and comprehensive but also provides a human-inspectable foundation for any future fully-automated forecasting systems.

- Bargaining and negotiation: To avert catastrophes driven by "race dynamics," we can develop AI tools for high-stakes negotiations between entities like nations or corporations. These systems could act as trusted, neutral mediators to find positive-sum solutions or as delegates to massively expand negotiation bandwidth. Building the foundational technological infrastructure for these tools is a crucial and high-leverage first step.

- Wise decision-making: To prevent catastrophic errors, we can develop AI tools that promote wiser decision-making. These systems could function as advisors that help people clarify their core preferences and navigate emotional biases, and as "sanity-checkers" that flag potentially disastrous choices before they're finalized.

- Evaluations for epistemic virtue: To build more justified trust in AI systems and improve human coordination, we can develop benchmarks to measure their "epistemic virtue" (e.g., honesty, clarity, cooperativeness). Since the AI field excels at optimizing for what can be measured, these evaluations would provide a clear path to training future models to be more deeply and verifiably trustworthy.

-

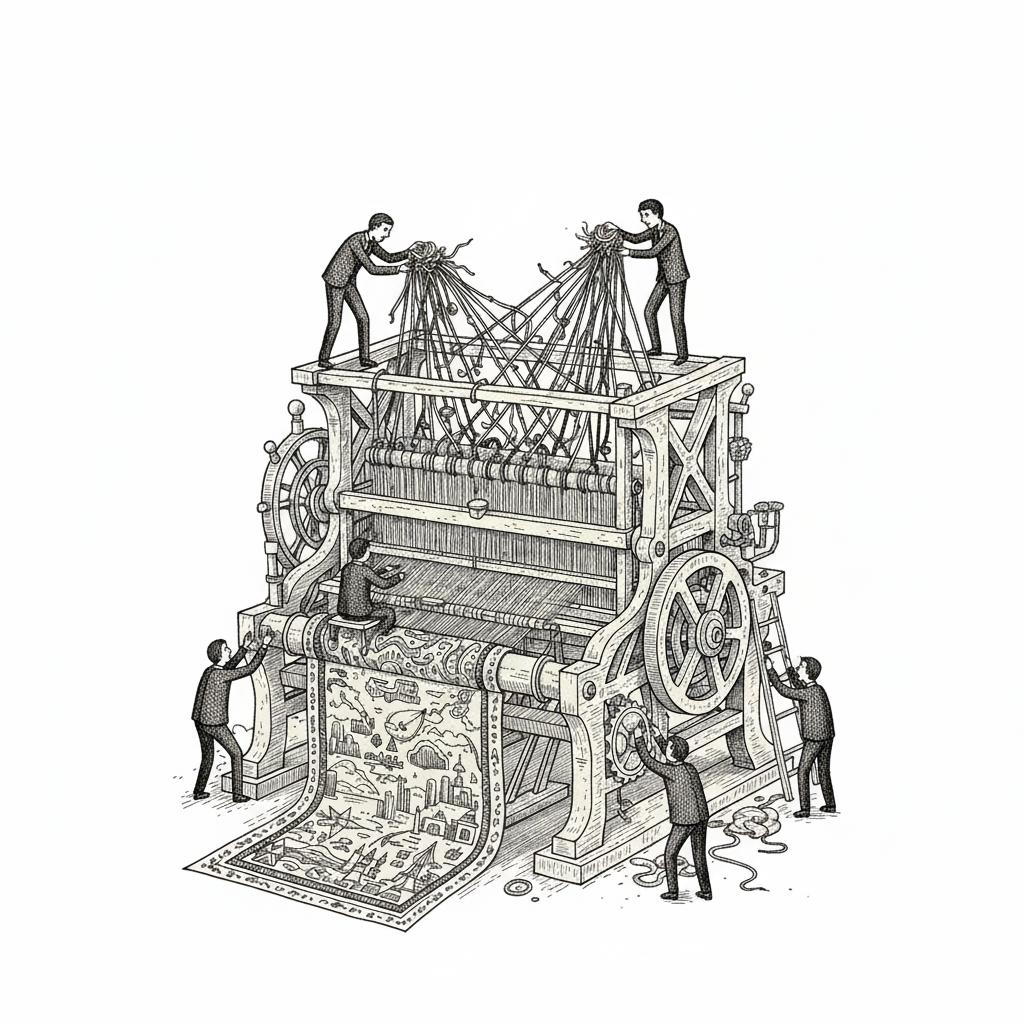

Coordination and consensus-finding: To overcome collective action problems that disempower large groups, we can use automated systems for finding consensus and analyzing data while preserving privacy. Furthermore, creating platforms that enforce contractual obligations can foster more stable, large-scale cooperation and empower these broader communities.

As AI capabilities grow, we are far from guaranteed to navigate the transition wisely. The most catastrophic failures in history, and the existential risks we face in the future, often stem not from pure malice but from people and institutions making critical mistakes, even by their own lights.

Improving human reasoning is therefore not just an optimistic goal; it is a critical safeguard. By providing tools that help us avoid unforced errors, clarify our true priorities, and understand the consequences of our actions, we can better navigate the challenges AI presents.